Main Reference: https://arxiv.org/abs/2405.08793

Have you ever thought about the word “causes” in the sentence we’ve all heard: “Smoking causes lung cancer”?

It sounds pretty simple, right? The way I see it, at least, is the following: if someone has lung cancer and they smoke, we assume smoking caused it.

smoke --- causes ---> cancer

Okay, but what about the many other ways the cancer could have arisen? Genetic mutations, alcohol consumption, aging, an unhealthy lifestyle, and radiation exposure, for example, can all cause cancer.

Let’s imagine we’re analyzing an 80-year-old man who has smoked since he was 21. Whenever he smoked at night, he also drank at least three cans of beer; during the day he worked on a farm, exposed to sunlight for 8 hours a day; and he was obese by the time he was diagnosed with lung cancer.

How can we tell precisely that the cigarettes were responsible? Don’t get me wrong; I’m not denying that cigarettes are bad for our health, but this question is fascinating. How can we isolate all those other variables to say that, had he not smoked, his chances of developing lung cancer would have been smaller?

It is thus an important, if not the most important, job for practitioners of causal inference to convincingly argue why some variables are included and others were omitted. They must also argue why some of the included variables are considered potential causes and why they chose a particular variable as the outcome. This process can be thought of as defining a small universe in which causal inference must be performed.

Probabilistic Graphical Models

A probabilistic graphical model, also referred to as a Bayesian graphical model, is a directed graph $G = (V, E)$, where $V$ is a set of vertices and $E$ is a set of directed edges. Each node $v \in V$ corresponds to a random variable, and each edge $e = (v_s, v_e) \in E$ represents the dependence of $v_e$ on $v_s$.

For each node $v \in V$, we define a probability distribution $p_v(v \mid \mathrm{pa}(v))$ over this variable conditioned on all the parent nodes

$$\mathrm{pa}(v) = \{ v' \in V \mid (v', v) \in E \}.$$

At first, when I looked at this equation, I didn’t completely understand it. Let’s break it into parts:

1. Node $v \in V$: Here, $v$ represents a node, and $V$ is the set of all nodes in a graph. This means $v$ is an individual node that belongs to the set of nodes, $V$.

2. Probability distribution $p_v(v \mid \mathrm{pa}(v))$: This represents a conditional probability distribution for node $v$ given its parent nodes $\mathrm{pa}(v)$. Essentially, it’s saying that the value or state of node $v$ is influenced by its parent nodes, and this distribution captures how $v$ depends on them.

3. Parent nodes $\mathrm{pa}(v)$: The function $\mathrm{pa}(v)$ refers to the set of parent nodes of $v$. In other words, $\mathrm{pa}(v)$ represents all the graph nodes with a directed edge pointing to $v$. These parent nodes directly influence the state of node $v$.

4. Set notation $\mathrm{pa}(v) = \{ v' \in V \mid (v', v) \in E \}$: This equation defines the set of parent nodes. It means that $\mathrm{pa}(v)$ is the set of nodes $v'$ that are in $V$ (the set of all nodes), where there exists an edge $(v', v) \in E$. In graph terminology, $E$ is the set of edges, and $(v', v)$ indicates an edge going from node $v'$ to node $v$. This implies that $v'$ is a parent of $v$.

In summary:

- $v$ is a node in a graph.
- $\mathrm{pa}(v)$ is the set of parent nodes for $v$ (i.e., nodes that have edges leading to $v$).
- $p_v(v \mid \mathrm{pa}(v))$ is the probability of $v$ conditioned on its parents.
- The set definition $\mathrm{pa}(v) = \{ v' \in V \mid (v', v) \in E \}$ means that $\mathrm{pa}(v)$ consists of all nodes $v'$ that have directed edges to $v$.
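To make the parent-set definition concrete, here is a minimal Python sketch that stores a graph as a set of vertices `V` and a set of directed `(source, target)` edges `E`, and computes $\mathrm{pa}(v)$ straight from the set-builder definition. The three-node graph (a hypothetical `old` node pointing to both `smoke` and `cancer`, plus `smoke → cancer`) is an illustrative choice that echoes the smoking story; it is not taken from the reference.

```python
# Hypothetical DAG G = (V, E); each edge is a (source, target) pair.
V = {"old", "smoke", "cancer"}
E = {("old", "smoke"), ("old", "cancer"), ("smoke", "cancer")}


def parents(v, V, E):
    """pa(v) = {v' in V | (v', v) in E}: every node with an edge into v."""
    return {u for u in V if (u, v) in E}


for v in sorted(V):
    print(v, sorted(parents(v, V, E)))
# cancer ['old', 'smoke']
# old []
# smoke ['old']
```

Note that `old` has no parents, so its distribution is unconditional, while `cancer` depends on two parents.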

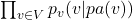

We can then write a joint distribution over all the variables as

$$p(V) = \prod_{v \in V} p_v(v \mid \mathrm{pa}(v)).$$

With $P$, a set of all conditional probabilities $p_v$’s, we can denote any probabilistic graphical model as a triplet $(V, E, P)$.

1. Joint Distribution $p(V) = \prod_{v \in V} p_v(v \mid \mathrm{pa}(v))$:

- The equation represents the joint probability distribution over all nodes in the graph.
- $p(V)$ denotes the graph’s joint probability distribution for all the nodes (variables). This is the overall probability of observing a configuration of all the variables.

2. Set of Nodes $V$:

- $V$ represents the set of all graph nodes (or variables). Each node $v \in V$ is an individual variable whose value depends on the values of its parent nodes; a node with no parents simply has an unconditional distribution.

3. Conditional Probability $p_v(v \mid \mathrm{pa}(v))$:

- $p_v(v \mid \mathrm{pa}(v))$ is the conditional probability of node $v$ given its parent nodes $\mathrm{pa}(v)$. This expresses how the state of node $v$ depends on the states of its parents in the graph.
- It indicates that the value of $v$ is not independent but influenced by its parents (nodes that have directed edges pointing to $v$).

4. Parent Nodes $\mathrm{pa}(v)$:

- $\mathrm{pa}(v)$ refers to the set of parent nodes for a node $v$. These are the nodes that have a directed edge pointing to $v$. In other words, the state of node $v$ is influenced by the states of these parent nodes.
- Specifically, $\mathrm{pa}(v) = \{ v' \in V \mid (v', v) \in E \}$ is a formal definition of the parent set. Here:
  - $V$ is the set of all nodes in the graph.
  - $E$ is the set of edges in the graph.
  - The expression $(v', v) \in E$ means a directed edge from node $v'$ to node $v$, indicating that $v'$ is a parent of $v$.

5. Overall Meaning:

- The product $\prod_{v \in V} p_v(v \mid \mathrm{pa}(v))$ is a way of expressing the joint distribution of all the variables in the graph.
- It says that the joint probability of all nodes can be computed by multiplying the individual conditional probabilities of each node given its parents.

- The idea is that the graph structure encodes dependencies between the variables. The joint distribution is factorized in terms of these conditional dependencies, reflecting the local structure of the graph.
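For a hypothetical three-node graph (`old → smoke`, `old → cancer`, `smoke → cancer`), the factorization reads $p(\text{old}, \text{smoke}, \text{cancer}) = p(\text{old})\, p(\text{smoke} \mid \text{old})\, p(\text{cancer} \mid \text{old}, \text{smoke})$. The sketch below stores the triplet $(V, E, P)$ in plain Python for binary variables, with each entry of `P` returning $p_v(v \mid \mathrm{pa}(v))$, and multiplies the local conditionals to evaluate the joint. All probabilities are made-up numbers for illustration, not estimates of real risks.

```python
from itertools import product

# Hypothetical model (V, E, P) over binary variables; every number is made up.
V = ["old", "smoke", "cancer"]
E = {("old", "smoke"), ("old", "cancer"), ("smoke", "cancer")}


def parents(v):
    """pa(v): nodes u with a directed edge (u, v) in E."""
    return [u for u in V if (u, v) in E]


def bernoulli(p1, x):
    """Probability of x in {0, 1} when P(x = 1) = p1."""
    return p1 if x == 1 else 1 - p1


# P maps each node to p_v(value | parent values); pa is a dict {parent: value}.
P = {
    "old": lambda x, pa: bernoulli(0.3, x),
    "smoke": lambda x, pa: bernoulli(0.4 if pa["old"] == 1 else 0.2, x),
    "cancer": lambda x, pa: bernoulli(
        {(0, 0): 0.01, (0, 1): 0.05, (1, 0): 0.03, (1, 1): 0.10}[(pa["old"], pa["smoke"])], x
    ),
}


def joint(assignment):
    """p(V) = product over v of p_v(v | pa(v)), for one full assignment {node: value}."""
    prob = 1.0
    for v in V:
        pa_values = {u: assignment[u] for u in parents(v)}
        prob *= P[v](assignment[v], pa_values)
    return prob


# Sanity check: the joint sums to 1 over all 2**3 assignments.
total = sum(joint(dict(zip(V, values))) for values in product([0, 1], repeat=len(V)))
print(round(total, 10))  # 1.0
```

Because each local conditional is itself normalized, their product is automatically a valid joint distribution; the final sum is only a check on that.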

From the joint distribution, we can derive conditional distributions for various subsets of the variables by marginalizing the variables that we are not interested in. Marginalization involves summing out the unwanted variables, and conditional probability is used to express the relationship between the remaining variables.

Step 1: Marginalizing a Variable

Let’s say we are not interested in a particular node $\bar{v}$ in the graph. To marginalize over this variable, we sum out $\bar{v}$ from the joint distribution $p(V)$. This means we add up the joint probability over every value $\bar{v}$ can take, which leaves a distribution over the other variables only. The result is:

$$p(V \setminus \{\bar{v}\}) = \sum_{\bar{v}} p(V)$$

Here:

- $V$ represents the entire set of variables.
- $\bar{v}$ is the variable being removed; the sum runs over every value it can take.
- $p(V \setminus \{\bar{v}\})$ is the marginal probability distribution over all variables except $\bar{v}$.
- The summation over $\bar{v}$ indicates that we are summing out the variable $\bar{v}$.
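The summation above can be sketched in a few lines of Python, assuming the joint is stored as a dictionary from value tuples to probabilities. The joint over (`old`, `smoke`, `cancer`) is a hypothetical one built from made-up conditionals, and `sum_out` is an illustrative helper, not a library function.

```python
from itertools import product

# Hypothetical conditionals (made-up numbers) over binary variables 0/1.
p_old = {0: 0.7, 1: 0.3}                      # p(old)
p_smoke1 = {0: 0.2, 1: 0.4}                   # p(smoke = 1 | old)
p_cancer1 = {(0, 0): 0.01, (0, 1): 0.05,      # p(cancer = 1 | old, smoke)
             (1, 0): 0.03, (1, 1): 0.10}


def bernoulli(p1, x):
    """Probability of x in {0, 1} when P(x = 1) = p1."""
    return p1 if x == 1 else 1 - p1


# Joint table p(old, smoke, cancer), keyed by (old, smoke, cancer).
joint = {
    (o, s, c): p_old[o] * bernoulli(p_smoke1[o], s) * bernoulli(p_cancer1[(o, s)], c)
    for o, s, c in product([0, 1], repeat=3)
}


def sum_out(table, axis):
    """Marginalize the variable at position `axis` by adding up over all of its values."""
    out = {}
    for key, prob in table.items():
        rest = key[:axis] + key[axis + 1:]
        out[rest] = out.get(rest, 0.0) + prob
    return out


# p(smoke, cancer) = sum over old of p(old, smoke, cancer)
p_smoke_cancer = sum_out(joint, axis=0)
for key, prob in sorted(p_smoke_cancer.items()):
    print(key, round(prob, 4))
```

Passing `axis=1` or `axis=2` instead sums out `smoke` or `cancer`. The result still sums to 1 because no probability mass is discarded, only regrouped.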

Note: when we say “we are summing out the variable $\bar{v}$”, we are not “excluding its value” in the sense of ignoring it or discarding it entirely. Instead, summing out $\bar{v}$ means that we are eliminating the dependency on $\bar{v}$ by summing over all possible values that $\bar{v}$ can take. This process integrates out (for continuous variables) or sums out (for discrete variables) the variable from the joint distribution.

This step reduces the joint distribution to the marginalized distribution over the remaining variables.
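For a continuous variable, the sum becomes an integral. As a hedged illustration, the sketch below assumes a hypothetical model with $x \sim \mathcal{N}(0, 1)$ and $y \mid x \sim \mathcal{N}(x, 1)$, integrates $x$ out of $p(x, y)$ numerically with a midpoint rule, and compares the result with the closed-form marginal $y \sim \mathcal{N}(0, 2)$ that this particular model has. The integration range and step count are arbitrary choices.

```python
import math


def normal_pdf(x, mean, var):
    """Density of N(mean, var) at x."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def joint_density(x, y):
    """Hypothetical model: x ~ N(0, 1) and y | x ~ N(x, 1), so p(x, y) = p(x) p(y | x)."""
    return normal_pdf(x, 0.0, 1.0) * normal_pdf(y, x, 1.0)


def marginal_y(y, lo=-10.0, hi=10.0, n=20_000):
    """Integrate x out of p(x, y) with a midpoint Riemann sum over [lo, hi]."""
    dx = (hi - lo) / n
    return sum(joint_density(lo + (i + 0.5) * dx, y) for i in range(n)) * dx


# For this model the exact marginal is y ~ N(0, 2); the two numbers should agree closely.
y = 0.5
print(marginal_y(y), normal_pdf(y, 0.0, 2.0))
```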

Step 2: Turning Joint Probability into Conditional Probability

We can also express a conditional probability by using the definition of conditional probability. Remember,

$$p(A \mid B) = \frac{p(A, B)}{p(B)}$$

To condition on a specific variable, say $\bar{v}$, we divide the joint probability by the marginal probability of $\bar{v}$. The conditional probability of the remaining variables, given $\bar{v}$, is:

$$p(V \setminus \{\bar{v}\} \mid \bar{v}) = \frac{p(V)}{p(\bar{v})} = \frac{p(V)}{\sum_{V \setminus \{\bar{v}\}} p(V)}$$

Here:

- $p(V \setminus \{\bar{v}\} \mid \bar{v})$ represents the conditional probability of all the remaining variables in $V$ given that $\bar{v}$ takes some specific value.
- $p(\bar{v})$ is the marginal probability of $\bar{v}$.

This equation shows how to convert the joint distribution into a conditional distribution by normalizing with the marginal probability of $\bar{v}$.
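A minimal sketch of this step, assuming a hypothetical joint table $p(\text{smoke}, \text{cancer})$ with made-up entries that sum to 1. Here $\bar{v}$ is `smoke` (the first coordinate of each key): we first compute $p(\bar{v})$ by summing over the remaining variable, then divide each joint entry by it.

```python
# Hypothetical joint p(smoke, cancer); keys are (smoke, cancer), numbers are made up.
joint = {(0, 0): 0.56, (0, 1): 0.04, (1, 0): 0.32, (1, 1): 0.08}

# p(v_bar): sum the joint over the remaining variable (cancer).
p_smoke = {}
for (s, c), prob in joint.items():
    p_smoke[s] = p_smoke.get(s, 0.0) + prob

# p(cancer | smoke) = p(smoke, cancer) / p(smoke)
p_cancer_given_smoke = {(s, c): prob / p_smoke[s] for (s, c), prob in joint.items()}

for s in (0, 1):
    row = [p_cancer_given_smoke[(s, c)] for c in (0, 1)]
    print(f"smoke={s}: [p(cancer=0), p(cancer=1)] = {row}, row sum = {sum(row):.6f}")
```

Each row of the conditional table sums to 1, which is exactly what dividing by $p(\bar{v})$ (normalizing) achieves.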

Step 3: Marginalizing with Conditional Probability

Now, we can combine marginalization and conditional probability to express the marginalized probability explicitly using the definition of conditional probability.

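First, let’s express the marginal probability $p(V \setminus \{\bar{v}\})$ as follows:

$$p(V \setminus \{\bar{v}\}) = \sum_{\bar{v}} p(V)$$

Next, using the conditional probability expression, we know that:

$$p(V) = p(V \setminus \{\bar{v}\} \mid \bar{v})\, p(\bar{v})$$

Finally, substituting this into the sum, we can rewrite the marginal as:

$$p(V \setminus \{\bar{v}\}) = \sum_{\bar{v}} p(V \setminus \{\bar{v}\} \mid \bar{v})\, p(\bar{v})$$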

Here:

- $p(V \setminus \{\bar{v}\})$ is the marginalized probability over the remaining variables.
- $p(\bar{v})$ is the marginal probability of the variable $\bar{v}$.

This shows how the marginal probability can be written as a weighted sum of the conditional probabilities, with the weights being the marginal probabilities of the variables that were marginalized out.
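The identity can be checked numerically. In this minimal sketch, $V = \{\text{old}, \text{smoke}\}$ and $\bar{v} = \text{old}$, with hypothetical made-up numbers for $p(\text{old})$ and $p(\text{smoke} \mid \text{old})$. The marginal $p(\text{smoke})$ is computed once by summing the joint directly (Step 1) and once as a weighted sum of conditionals (Step 3).

```python
# Hypothetical numbers (made up): p(old) and p(smoke | old), indexed [old][smoke].
p_old = {0: 0.7, 1: 0.3}
p_smoke_given_old = {0: {0: 0.8, 1: 0.2}, 1: {0: 0.6, 1: 0.4}}

# Route 1: build the joint p(old, smoke) and sum out old directly.
joint = {(o, s): p_old[o] * p_smoke_given_old[o][s] for o in (0, 1) for s in (0, 1)}
direct = {s: sum(joint[(o, s)] for o in (0, 1)) for s in (0, 1)}

# Route 2: weighted sum of conditionals p(smoke | old), with weights p(old).
weighted = {s: sum(p_smoke_given_old[o][s] * p_old[o] for o in (0, 1)) for s in (0, 1)}

print(direct)
print(weighted)
```

Both routes give $p(\text{smoke} = 1) = 0.7 \cdot 0.2 + 0.3 \cdot 0.4 = 0.26$: each value of `old` contributes its conditional, weighted by how likely that value is.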

Step 4: Interpreting Marginalization

The process of marginalization can be understood as computing the weighted sum of the conditional probabilities of the remaining variables, where the weights are given by the marginal probabilities of the variable being marginalized. Essentially, each possible value of $\bar{v}$ contributes to the final marginal distribution, with its contribution weighted by its marginal probability $p(\bar{v})$.

Summary

- Marginalization corresponds to computing a weighted sum of the conditional probabilities, where the weights are the marginal probabilities of the variables being marginalized.

- Marginalization involves summing out a variable (or set of variables) from the joint distribution.

- We can convert a joint probability into a conditional probability using the definition of conditional probability.

- By combining these ideas, we can express the marginalized probability distribution in terms of conditional probabilities and the marginal probabilities of the marginalized variable.